People-literate tech: why we need the human touch

Technology will need to understand human behaviour and the complexities of interfaces such as speech for it to integrate seamlessly into society.

We have learnt to use ever more complex mapping systems, navigation systems and revolutionary modes of transport. The future of mobility, however, will rely on more than just new technologies. We must also look at the intersection between location-based technology and human beings. Transport technology will need to understand human behaviour and the complexities of interfaces such as speech for it to integrate seamlessly into society.

Adapting technology in this way involves a field known as human factors, which examines the relationship between humans and the systems with which they interact. The challenge is to represent the complexity of the human experience of space and place in a form that a machine can both compute with and use when interacting with a person. Consider, for example, the boundaries of a neighbourhood like London’s Soho: where the edges of this area lie is very subjective, depending on the experience of the people who visit, live or work there, or who have simply heard about the place.

Equally, if you instruct an autonomous vehicle to take you “through the park on the way home from work”, how does the vehicle comprehend the command to go ‘through the park’? Does this mean via the fastest route across the lawns, along the road through the middle, or by the more scenic route? How does it understand the personal context of ‘work’ and ‘home’?

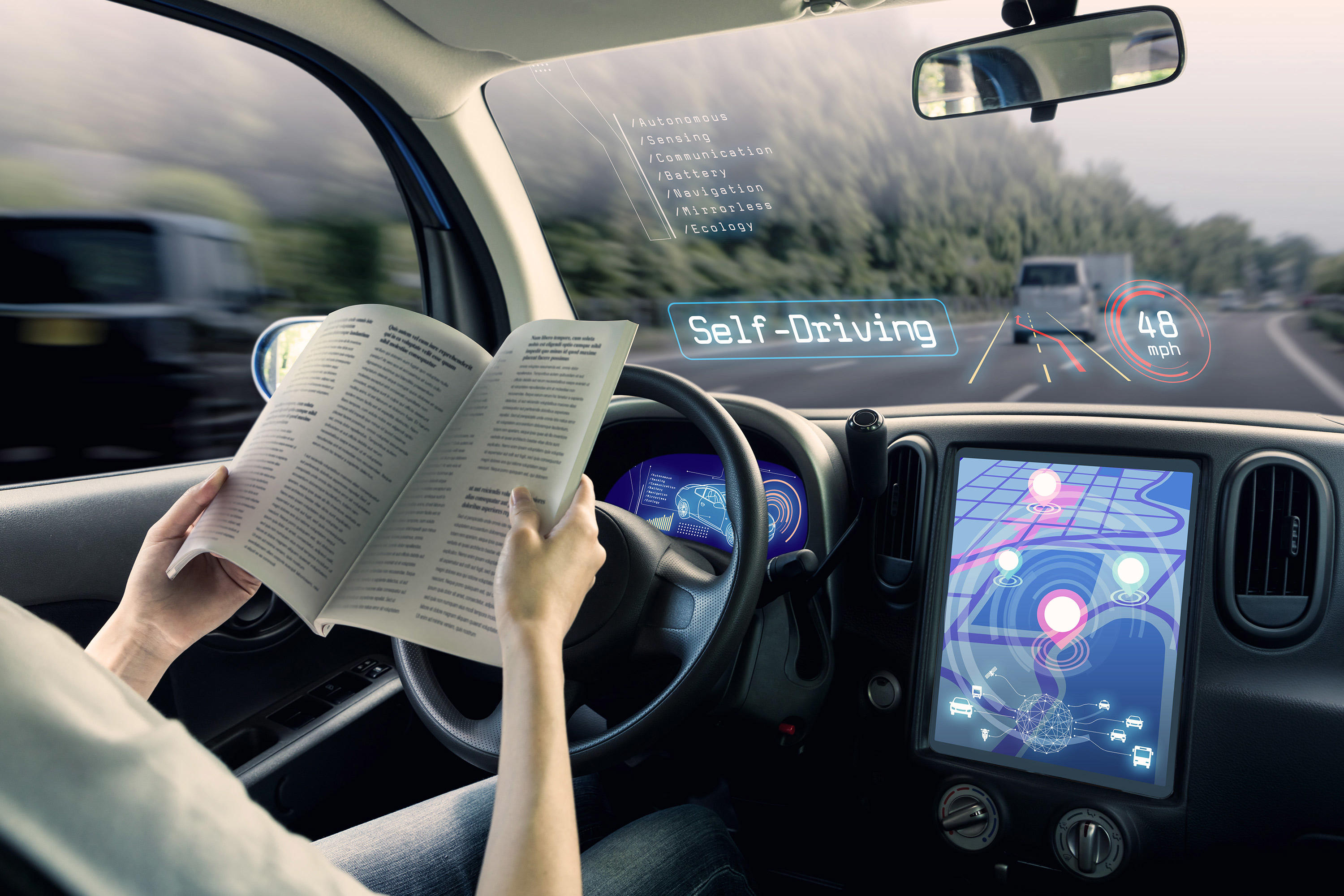

Another scenario where human factors and the usability of technology come into play is one where your autonomous vehicle has been driving you for an hour or two while you doze or read a book. You are not really paying attention to where you are. Suddenly, the vehicle runs into a fog bank and has to hand control back to you. Once you’re past the surprise and have resumed driving, how do you know where you are on your journey and what road you’re on? The machine needs to understand when and how to provide that navigational context. Should it be through a sat nav-style map on a heads-up display, or by telling you verbally? This is a focus of research at the University of Nottingham.

Training intelligent vehicles to understand human instructions, and to adapt to the way humans communicate and behave, is vital to ensuring new technologies are accessible to everyone. It is being explored in a number of ways. Natural language processing, the computational modelling of human language, is being used to examine the spatial elements of language, alongside research in experimental psychology and neuropsychology into how humans understand space and networks. Additionally, crowdsourcing is being used as a tool to understand geography from the human perspective, beyond the numbers and distances associated with a location.

For example, the Maritime and Coastguard Agency (MCA) relies heavily on the interaction between a coastguard operator and the person making the phone call to identify the location of an incident and so to carry out a rescue. However, call handling is often centralised, and a call can be received by an operator anywhere in the country. The operator taking the call may not have local knowledge of the exact place where the rescue team is needed, and the caller may use nicknames or informal geographic labels. When it comes to describing the location of the person in need, there can be miscommunication and confusion, potentially leading to life-threatening delays.

The solution, a project called FINTAN, combined OS data with crowdsourced local knowledge of the geography along the coastline, so that the coastguard could collate informal names for landmarks and locations around the country. Visualising these names on a map interface allows the MCA to decipher ambiguous descriptions quickly. This speeds up the response by minimising the crucial time spent determining the precise location where rescue is needed, ultimately saving lives. In essence, it gives digital maps a human face.
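To make the idea of matching informal names to mapped locations more concrete, here is a minimal sketch in Python. It is not how FINTAN itself works, which this article does not describe in detail. It simply assumes a small table of crowdsourced nicknames and uses approximate string matching from the standard library to suggest candidates for what a caller says. Every place name, coordinate and function name below is invented for illustration.

```python
from difflib import get_close_matches

# Hypothetical crowdsourced entries: informal name -> (official name, lat, lon).
# All names and coordinates are invented for illustration only.
VERNACULAR = {
    "the old pier": ("Example Pier", 50.10, -5.20),
    "smugglers steps": ("Example Cove Access Path", 50.12, -5.25),
    "seal rock": ("Example Skerry", 50.15, -5.30),
}


def normalise(text):
    """Lower-case, drop straight apostrophes and collapse repeated spaces."""
    return " ".join(text.lower().replace("'", "").split())


def lookup(description, cutoff=0.6):
    """Return candidate places whose informal name resembles the description.

    Each result is (informal name, official name, lat, lon), best match first.
    An empty list means nothing was similar enough to suggest.
    """
    key = normalise(description)
    matches = get_close_matches(key, list(VERNACULAR), n=3, cutoff=cutoff)
    return [(name, *VERNACULAR[name]) for name in matches]


if __name__ == "__main__":
    for place in lookup("Smuggler's Steps"):
        print(place)
```

A real system would need far richer matching than spelling similarity, for instance several nicknames per place, regional variants and a map view for the operator to confirm the candidate. The sketch only shows the basic step of turning a caller's wording into a short list of mapped locations.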

The transport technologies on the horizon have the potential to revolutionise mobility, as earlier innovations did in previous decades. Society’s adaptation to these innovations must be seamless. This can be achieved by ensuring, from the start, that human behaviour does not have to change drastically in order to use them: in other words, by creating ‘people-literate’ tech.

By combining location data with knowledge from natural language processing, crowdsourced information and human psychology, intelligent transport systems will be accessible to everyone and will provide the social and economic benefits they have the potential to offer. Applying the human touch to digital maps will ensure future transport technology adapts to and works for the people it is designed to serve.

Chief Geospatial Scientist

Jeremy is Chief Geospatial Scientist at Ordnance Survey. He commissions geospatial research including 25 PhD and postdoc projects and runs a research team which looks at topics affecting future geospatial services.